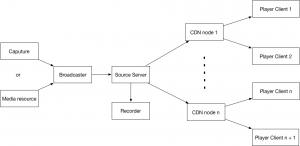

1. How does live video streaming work?

A basic live streaming process has these key parts:

- Capture device or media source

- Broadcaster

- Source server

- Recorder for playback

- Content Delivery Network (CDN) nodes

- Player Clients

The process works like this. The broadcaster (a smartphone, OBS, or another device) pushes the stream from the capture device or media source to the source server over the Real-Time Messaging Protocol (RTMP).

The source server's main duty is transcoding. If clients need playback, the source server can also record the video in real time and save it to cloud storage.

Next, each CDN node that has player clients nearby pulls the stream from the source server. The CDN serves two functions: load balancing and caching. Finally, clients pull the stream from the CDN over a suitable protocol.

Through these fairly simple steps, you can watch live video on your phone.
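To make the CDN's caching role concrete, here is a minimal Python sketch of an edge node that pulls a stream from the source server only once and then serves every nearby player from its cache. The `Origin` and `EdgeNode` classes are hypothetical illustrations; a real edge keeps the pull open and appends new data as it arrives, and also handles eviction and failover.

```python
from dataclasses import dataclass, field


@dataclass
class Origin:
    """Hypothetical source server holding transcoded streams by name."""
    streams: dict[str, list[bytes]]
    pulls: int = 0  # how many times an edge node fetched from the origin

    def pull(self, name: str) -> list[bytes]:
        self.pulls += 1
        return list(self.streams[name])


@dataclass
class EdgeNode:
    """Hypothetical CDN edge: fetch each stream from the origin once, then serve from cache."""
    origin: Origin
    cache: dict[str, list[bytes]] = field(default_factory=dict)

    def serve(self, name: str) -> list[bytes]:
        if name not in self.cache:
            # Cache miss: only the first nearby viewer triggers a pull from the origin.
            self.cache[name] = self.origin.pull(name)
        return self.cache[name]


origin = Origin(streams={"live1": [b"chunk0", b"chunk1"]})
edge = EdgeNode(origin)
for _ in range(3):  # three players near the same edge node
    frames = edge.serve("live1")
print(origin.pulls)  # 1: the origin was contacted only once
```

Because the origin is contacted once per stream per edge rather than once per viewer, the load on the source server stays roughly flat as the audience grows.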

2. What are the differences between live video protocols?

When I was a beginner in live video streaming development, I was confused by these live streaming protocols. I worked hard to find the differences between them and made a summary.

-

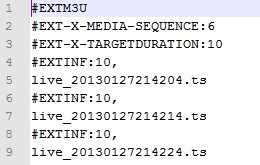

Http live streaming (HLS)HLS is an HTTP-based media streaming communications protocol implemented by Apple Inc. as part of its QuickTime, Safari, OS X, and iOS software. It resembles MPEG-DASH in that it works by breaking the overall stream into a sequence of small HTTP-based file downloads, each download loading one short chunk of an overall potentially unbounded transport stream. As the stream is played, the client may select from a number of different alternate streams containing the same material encoded at a variety of data rates, allowing the streaming session to adapt to the available data rate. At the start of the streaming session, HLS downloads an extended M3U playlist containing the metadata for the various sub-streams that are available. Wikipedia-EN

The format of an M3U8 playlist:

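| Tag | Meaning |
| --- | --- |
| `#EXTM3U` | M3U header; must be written on the first line |
| `#EXT-X-MEDIA-SEQUENCE` | Sequence number of the first .ts segment in the playlist |
| `#EXT-X-TARGETDURATION` | Maximum duration of each segment, in seconds |
| `#EXT-X-ALLOW-CACHE` | Whether clients may cache the downloaded segments |
| `#EXT-X-ENDLIST` | End marker of the m3u8 file; no more segments will be added |
| `#EXTINF` | Extra info about the .ts segment that follows, such as its duration (bandwidth is declared separately, with `#EXT-X-STREAM-INF` in a master playlist) |

The playlist also contains the file paths of the .ts segments, each on the line after its `#EXTINF` tag.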
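A minimal Python sketch of how a server might write a media playlist with these tags. The function name and the `seg_N.ts` file names and durations are illustrative assumptions, and optional tags such as `#EXT-X-ALLOW-CACHE` are left out.

```python
import math


def build_media_playlist(segments, media_sequence=0, ended=False):
    """Build an HLS media playlist from (uri, duration_seconds) pairs (sketch only)."""
    # The target duration must cover the longest segment; ceil() is a safe upper bound.
    target = math.ceil(max(duration for _, duration in segments))
    lines = [
        "#EXTM3U",  # header, must be the first line
        f"#EXT-X-TARGETDURATION:{target}",
        f"#EXT-X-MEDIA-SEQUENCE:{media_sequence}",
    ]
    for uri, duration in segments:
        lines.append(f"#EXTINF:{duration:.3f},")  # duration, then an empty title
        lines.append(uri)  # path of the .ts segment
    if ended:
        lines.append("#EXT-X-ENDLIST")  # only for finished streams, e.g. recordings
    return "\n".join(lines) + "\n"


print(build_media_playlist(
    [("seg_100.ts", 6.0), ("seg_101.ts", 6.0), ("seg_102.ts", 5.5)],
    media_sequence=100,
))
```

While a stream is live, the server omits `#EXT-X-ENDLIST`, drops old segments from the top, and increases the media sequence number as new segments are appended.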

You can find a more detailed introduction in Apple's documentation.

When clients pull an HLS stream, they first download the current playlist generated by the server, then download the .ts segments one by one. A segment only appears in the playlist after the source server has finished encoding it, and each segment typically lasts several seconds, so clients can only start pulling the stream some time after it was captured. This is why HLS has high latency. Outside HTML environments, this protocol isn't recommended.
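To see how the delay adds up, here is a rough back-of-the-envelope sketch in Python. It assumes the player follows the HLS specification's (RFC 8216) advice not to start closer than three target durations to the end of the playlist, and that the newest segment can itself be up to one target duration old; network and decoding time are ignored. The function names are hypothetical.

```python
def parse_target_duration(playlist: str) -> int:
    """Read the #EXT-X-TARGETDURATION value (seconds) from a media playlist."""
    for line in playlist.splitlines():
        if line.startswith("#EXT-X-TARGETDURATION:"):
            return int(line.split(":", 1)[1])
    raise ValueError("playlist has no #EXT-X-TARGETDURATION tag")


def estimate_hls_latency(playlist: str, segments_behind: int = 3) -> float:
    """Rough latency estimate in seconds under a simplified, hypothetical model.

    - The player starts about `segments_behind` target durations before the playlist end.
    - The newest listed segment may be up to one target duration old, because it is
      only published once fully encoded.
    """
    target = parse_target_duration(playlist)
    return float(target * (segments_behind + 1))


playlist = "#EXTM3U\n#EXT-X-TARGETDURATION:6\n#EXT-X-MEDIA-SEQUENCE:100\n#EXTINF:6.000,\nseg_100.ts\n"
print(estimate_hls_latency(playlist))  # 24.0
```

Under this model, 6-second segments give about 24 s of delay, and even 2-second segments give about 8 s, far above RTMP's typical latency.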

- Real-Time Messaging Protocol (RTMP): RTMP is a TCP-based communications protocol that is widely used for media streaming. It transports the stream continuously in real time rather than in files, so its latency is lower than HLS. TCP's reliable, ordered delivery ensures data integrity. In addition, RTMP comes from Adobe, and Adobe Flash Player can play RTMP streams natively. Most broadcasters also support this protocol for pushing streams.

- HTTP-FLV: HTTP-FLV transports the stream, encapsulated in the FLV format, over HTTP. HTTP has a header called Content-Length that specifies the length of the message body. If the server leaves out Content-Length, the client keeps receiving data for as long as the server sends it. HTTP-FLV packets may be smaller than RTMP packets, so it has an advantage in saving bandwidth. Besides, the latency of HTTP-FLV is almost equal to that of RTMP.

- UDP: Most video chat apps prefer UDP-based protocols, because UDP has no built-in congestion control or retransmission. This can bring latency down to under a second. The reason many companies don't use it is that they would need to rework their server architecture, so video chat companies, which already have such infrastructure, have a real advantage. Today, because they require extra-low latency, many online education companies use UDP to implement their live video streaming products.
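The HTTP-FLV point about Content-Length can be sketched in Python as follows: the server sends response headers without Content-Length (here using HTTP/1.1 chunked transfer encoding, one common option), then keeps writing FLV data. The 13-byte preamble follows the FLV file format: a 9-byte header ("FLV", version 1, audio/video flags, header size) plus a zero PreviousTagSize0. The function names are hypothetical, and writing the actual audio/video tags is omitted.

```python
import struct


def flv_preamble(has_audio: bool = True, has_video: bool = True) -> bytes:
    """FLV file header (9 bytes) followed by PreviousTagSize0 (4 bytes, always 0)."""
    flags = (0x04 if has_audio else 0) | (0x01 if has_video else 0)
    # Signature "FLV", version 1, type flags, header length as big-endian UI32 (= 9).
    header = b"FLV" + struct.pack(">BBI", 1, flags, 9)
    return header + struct.pack(">I", 0)


def http_flv_response_head() -> bytes:
    """Response head with no Content-Length: the body is an open-ended stream."""
    lines = [
        "HTTP/1.1 200 OK",
        "Content-Type: video/x-flv",
        "Transfer-Encoding: chunked",  # data is sent in chunks as it is produced
        "Connection: keep-alive",
    ]
    return ("\r\n".join(lines) + "\r\n\r\n").encode("ascii")


def http_chunk(data: bytes) -> bytes:
    """Wrap bytes as one HTTP/1.1 chunk: hex size, CRLF, data, CRLF."""
    return f"{len(data):X}\r\n".encode("ascii") + data + b"\r\n"


print(flv_preamble().hex())
```

A server would send `http_flv_response_head()`, then `http_chunk(flv_preamble())`, then one chunk per FLV tag for as long as the broadcast lasts.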

3. The problem of live video streaming latency

Different business requirements lead to different technical choices. For a live entertainment show, 2 to 5 s of latency may be acceptable, but an online course needs about 1 s to support normal teaching. These factors influence live video latency:

- Network speed: Throughout the live streaming process, the network speed of every hop, whether from the broadcaster to the source server or from the CDN to the player clients, affects the viewing experience. So network speed is an important factor in latency.

- Live video protocol: I discussed the characteristics of each protocol in the last section, so I won't repeat them here.

- Video encoding algorithm: To a large extent, an effective encoding strategy or a good algorithm can reduce latency. In the next section I will introduce video essentials and consider how to make an effective encoding strategy.
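To put a rough number on the network-speed factor, here is a small Python sketch: a chunk of D seconds of video encoded at bitrate R contains D·R bits, so over a link with bandwidth B it needs about D·R/B seconds to transfer. The bitrate and bandwidth values are illustrative assumptions, and real links add round-trip and queuing delay on top.

```python
def transfer_seconds(chunk_seconds: float, bitrate_kbps: float, bandwidth_kbps: float) -> float:
    """Time to move `chunk_seconds` of video at `bitrate_kbps` over a `bandwidth_kbps` link."""
    if bandwidth_kbps <= 0:
        raise ValueError("bandwidth must be positive")
    kilobits = chunk_seconds * bitrate_kbps  # size of the chunk
    return kilobits / bandwidth_kbps


# Illustrative numbers: a 2 s chunk encoded at 3000 kbps.
print(transfer_seconds(2, 3000, 5000))  # 1.2
print(transfer_seconds(2, 3000, 2000))  # 3.0
```

In the second case the bandwidth is below the bitrate, so each chunk takes longer to arrive than to play and the delay keeps growing until the player stalls.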

4. Video essentials

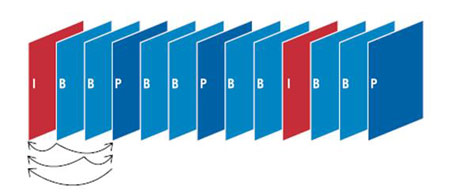

A video is in fact a sequence of pictures played fast enough (24 fps or more) to trick our eyes, which makes the picture feel like it is moving. But an uncompressed 1080p video at 60 fps would be enormous: a single intact frame takes several MB. Therefore, a video encoding that compresses the data is necessary.
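A quick Python check of the "several MB" figure, assuming 24-bit RGB (3 bytes per pixel) and no compression. YUV 4:2:0, which is common in video, uses 1.5 bytes per pixel and halves these numbers.

```python
def raw_frame_bytes(width: int, height: int, bytes_per_pixel: float = 3.0) -> float:
    """Size of one uncompressed frame in bytes."""
    return width * height * bytes_per_pixel


frame = raw_frame_bytes(1920, 1080)  # 24-bit RGB 1080p frame
per_second = frame * 60  # 60 fps
print(frame / 1e6)  # about 6.2 MB per frame
print(per_second / 1e6)  # about 373 MB per second
print(per_second * 8 / 1e9)  # about 3 Gbit/s
```

At roughly 3 Gbit/s, uncompressed 1080p60 is far beyond typical network bandwidth, which is why compression is unavoidable.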

A reasonable idea is to store only the differences between similar consecutive images. Removing that redundancy makes the video much smaller.
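The idea of storing only differences can be illustrated with a toy Python sketch: frames are flat lists of pixel values of equal length, the first frame is stored whole, and each later frame stores only the (index, value) pairs that changed. Real codecs use motion-compensated prediction on blocks rather than per-pixel diffs; this only shows why removing redundancy saves space.

```python
def delta_encode(frames):
    """Store the first frame whole, then only the changed pixels of each later frame."""
    encoded = [("full", list(frames[0]))]
    for prev, cur in zip(frames, frames[1:]):
        changes = [(i, v) for i, (p, v) in enumerate(zip(prev, cur)) if p != v]
        encoded.append(("delta", changes))
    return encoded


def delta_decode(encoded):
    """Rebuild every frame by applying each delta to the previous frame."""
    frames = [list(encoded[0][1])]
    for _, changes in encoded[1:]:
        frame = list(frames[-1])
        for i, v in changes:
            frame[i] = v
        frames.append(frame)
    return frames


frames = [[0, 0, 0, 0], [0, 9, 0, 0], [0, 9, 0, 7]]
enc = delta_encode(frames)
print(enc)  # [('full', [0, 0, 0, 0]), ('delta', [(1, 9)]), ('delta', [(3, 7)])]
```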

Some frames hold a complete image and are the least compressible; we call them I frames (intra-coded frames). Because a player can seek to and start decoding from an I frame, I frames are also called key frames. The frames from one I frame up to the next I frame form a group of pictures, or GOP. Frames that store only differences are predicted frames, and the frames they depend on are called reference frames. A predicted picture (P frame) holds only the changes in the image from the previous frame. A bidirectional predicted picture (B frame) saves even more space by using differences between the current frame and both the preceding and following frames to specify its content. Learn more on Wikipedia.
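A small Python sketch of the GOP definition above: given frame types in display order, it splits the sequence so that each group starts at an I frame. Representing a stream as a string of letters is a simplification for illustration.

```python
def split_gops(frame_types: str) -> list[str]:
    """Split e.g. 'IBBPIBBP' into GOPs, each beginning with an I frame."""
    gops: list[str] = []
    for t in frame_types:
        if t == "I" or not gops:
            gops.append(t)  # an I frame opens a new GOP
        else:
            gops[-1] += t
    return gops


print(split_gops("IBBPBBPIBBP"))  # ['IBBPBBP', 'IBBP']
```

If the input does not begin with an I frame, the first group cannot be decoded on its own. Dividing the GOP length by the frame rate gives the time between key frames; for example, 60 frames at 30 fps is 2 s.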

From these principles, we know that when a player decodes a video, a B frame needs both its preceding and following reference frames. Although B frames make the video smaller, they make decoding take longer, because the decoder has to wait until the frames a B frame references (in the worst case, much of the GOP) have been downloaded before it can decode that B frame. If the GOP is long, the wait is long.
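The reordering B frames cause can be sketched in Python: given frame types in display order, it computes the order a decoder must process them in, moving each I or P frame ahead of the B frames displayed before it, since those B frames reference it. This assumes each B frame references only the nearest I/P frames on either side, a common but simplified structure.

```python
def decode_order(display_types: str) -> list[int]:
    """Return display indices in decoding order for a simple I/P/B pattern."""
    order: list[int] = []
    pending_b: list[int] = []  # B frames waiting for their following reference frame
    for i, t in enumerate(display_types):
        if t == "B":
            pending_b.append(i)
        else:  # I or P: decode it first, then the B frames that needed it
            order.append(i)
            order.extend(pending_b)
            pending_b.clear()
    order.extend(pending_b)  # trailing B frames would depend on the next GOP
    return order


print(decode_order("IBBPBBP"))  # [0, 3, 1, 2, 6, 4, 5]
```

Frame 1 is displayed second but cannot be decoded until frame 3 has arrived. A stream without B frames decodes in display order, which is why low-latency encoder settings (for example, x264's zerolatency tuning) turn B frames off.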

5. How can the first frame load fast?

- Caching GOP: The player's decoder can only start playback once it receives an I frame. If the player has to wait 2 seconds for an I frame, the viewer may see a black screen for 2 seconds. So the player must load the first I frame quickly to start the live video swiftly. A common strategy is to cache the current GOP of the live stream on the CDN. When a player client pulls the stream, it gets the frames starting from the latest I frame directly from the CDN, which ensures the player can start playing immediately.

- Smaller buffer: The player downloads a bit of data into a buffer. When the buffer is empty, the decoder waits for data; when the buffer is full, the decoder works and you see the content on the screen. The buffer of a live video is on the order of hundreds of milliseconds. Reducing the buffer size helps the player render images on time. The disadvantage is that on a bad network the buffer will often run empty, and the user will see a loading indicator on the screen. So I suggest reducing only the size of the first buffer, so that the image shows up quickly.

- Selecting the nearest CDN: Don't pull the stream from a distant CDN node; the longer network route adds latency. Sometimes ignoring load balancing in favor of the nearest node is a good choice for live video.

- Using UDP: Switch from a TCP-based protocol to a UDP-based one, since UDP has no built-in congestion control.

- Using an IP address in the URL: DNS resolution can add a long delay to a network request. Don't use a domain name in the video URL; a local DNS solution is an excellent way to achieve this.
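The GOP-cache and smaller-first-buffer ideas can be sketched in Python. `GopCache` keeps the frames from the most recent I frame onward, so a newly joined player immediately receives a decodable key frame; `should_start` uses a smaller threshold for the first start than after a stall. The class, the `Frame` tuple, and the 100 ms and 500 ms thresholds are hypothetical illustrations, not a particular CDN's or player's API.

```python
from collections import namedtuple

Frame = namedtuple("Frame", ["kind", "payload"])  # kind is "I", "P" or "B"


class GopCache:
    """Edge-side cache holding the current GOP (from the latest I frame onward)."""

    def __init__(self):
        self._frames = []

    def push(self, frame):
        if frame.kind == "I":
            self._frames = []  # a new key frame starts a new GOP; drop the old one
        if self._frames or frame.kind == "I":
            self._frames.append(frame)  # frames before the first I frame are ignored

    def join(self):
        """Frames sent first to a newly connected player; they start with an I frame."""
        return list(self._frames)


def should_start(buffered_ms, started_before, first_ms=100, normal_ms=500):
    """Start on a small first buffer; require a larger one after a stall (hypothetical values)."""
    threshold = normal_ms if started_before else first_ms
    return buffered_ms >= threshold


cache = GopCache()
for kind in "PPIPPIPB":
    cache.push(Frame(kind, b""))
print("".join(f.kind for f in cache.join()))  # IPB
```

The trade-off is memory on the edge node: it holds up to one GOP per stream, which is another reason to keep GOPs short for live video.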
